Revolutionary becomes Evolutionary

Recently, I have had many discussions with friends and colleagues about new mobile devices. After using Windows Mobile for years, I switched to Apple’s iPhone 3GS three years ago. Before making the switch, I talked quite a lot with a friend who had recently bought one.

Until then, I had used an iPod for listening to music and Windows Mobile devices such as the HTC Hermes or the HTC Touch Pro for quite a few years. Over time, I got annoyed by always carrying two devices, two power plugs and two connector cables, and by managing at least two different applications to sync both devices. In the end, my decision to buy an iPhone was driven by quite rational considerations.

I was quite pleased with the hardware and never worried about the processor, RAM or other components of the device. The only drawback for me as a developer was that you cannot simply deploy your home-brewed applications to the device.

I skipped two generations of the iPhone and am finally thinking about getting a new device. What should it be? Meanwhile, I am quite out of touch with developing for Windows Mobile. Also, the hardware fragmentation among Windows devices is considerable, and the situation is similar with Android-based devices. Which one is the reference hardware to buy? While the idea of developing for the Android platform is tempting, there are more factors to consider.

After three years, I have to admit that ecosystem lock-in is a real factor. An iPad (first generation, but with 3G), a MacBook bought just a few months too early to get a Retina display, and quite a lot of peripherals to use with my devices are good reasons to stay. Nevertheless, with the new Lightning connector many peripheral devices have become obsolete.

Even more important than the hardware lock-in is the data lock-in. Dozens of apps holding your data, synced address books and calendars, and lifelogging and quantified-self data collected over the years are a good reason to stay with the current platform.

With the release of the new iPhone, there is a lot of making fun of it going on. However, considering the facts above, you can see that there are simple reasons to stay with a platform. Keeping customers on its platform is definitely a goal of every manufacturer, and Apple plays this game very well.

Looking at the new hardware, iOS 6 and the new Mac OS, there is no rocket science: no Star Trek communicator and no universal translator coming with the new iPhone. There is no revolution, simply a technological evolution of a long-designed system, a system that has grown over five generations.

Personally, I think a steady evolution of technology is worth a lot. I don’t want to migrate all my data, I don’t want to worry about which hardware to buy, and I don’t want to learn new user interfaces and usability concepts for now. I want a device that is part of my daily (business) life, easy to use, sitting in my pocket and available when needed. With the current evolution of the iPhone, this should be possible for the next one or two hardware generations.

That said, it will be a 64 GB iPhone in white for me, while for others it will be a Nokia Lumia 900 or a Google Galaxy Nexus, for the same or similar reasons mentioned above.

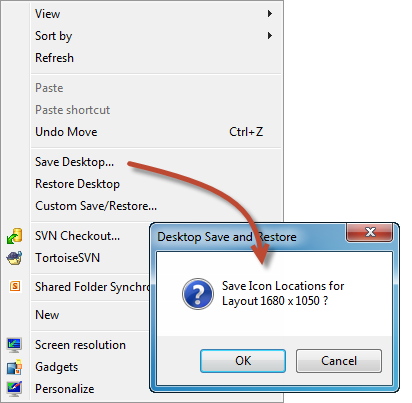

I continually move between different office locations, using my laptop with different monitor setups. The size, number and arrangement of the monitors vary from place to place. As a consequence, you either deal with a complete mess on your desktop or spend several hours per week rearranging icons on it.